Classical Software Engineer: 1950 - 2030

Software engineers are beneficiaries of the Von Neumann architecture. However, this architecture is becoming less relevant. Instead, a ‘natural language computer’ is emerging. How long will the traditional software engineering profession continue to exist in this new era of computing?

The Von Neumann architecture, which has been the foundation of modern computing, is becoming less relevant. In its place, a new type of computer built on natural language processing is emerging. How long will the traditional software engineering profession continue to exist in this new era of computing?

Software Engineers: Highly Paid Digital Porters

The essence of modern software engineering has been centered around transporting data between computing components like storage, memory, registers and CPUs - not much different than porters carrying goods. In the early days, this required extensive training to manually optimize data flows using low-level constructs like queues, stacks, and bespoke algorithms. Only engineers intimate with the underlying hardware could reliably orchestrate the movement of bits and bytes.

However, over time languages and frameworks abstracted away the gritty details. Fewer and fewer engineers truly comprehend the arcane workings of the machines they program. The rise of cloud computing has further obscured the underlying architecture. Engineers merely shuffle data between distributed web services without much awareness of the hardware.

With the advent of large language models, even the CPU is fading behind a cognitive layer of natural language interaction. The Von Neumann substrates that long fed the software engineering profession are being wholesale hidden behind intelligent interfaces. This calls into question the value of specialized skills in manually marshaling bits and bytes.

Much coding has become a game of matching inputs and outputs, gluing together modules and swirling data amidst clouds. Structures and algorithms now bubble up from frameworks rather than mental models. The professional barrier that once distinguished software "porter" work has eroded. Yet compensation remains disproportionately high for the manifold automatable tasks that constitute modern software development. As layers upon layers of abstraction emerge, expect market forces to undermine once rare skills now made commonplace by advancing technology.

Will the software engineering profession, in the "classical" sense, continue to exist?

The Birth of Software Engineers in the Golden Age of Computing

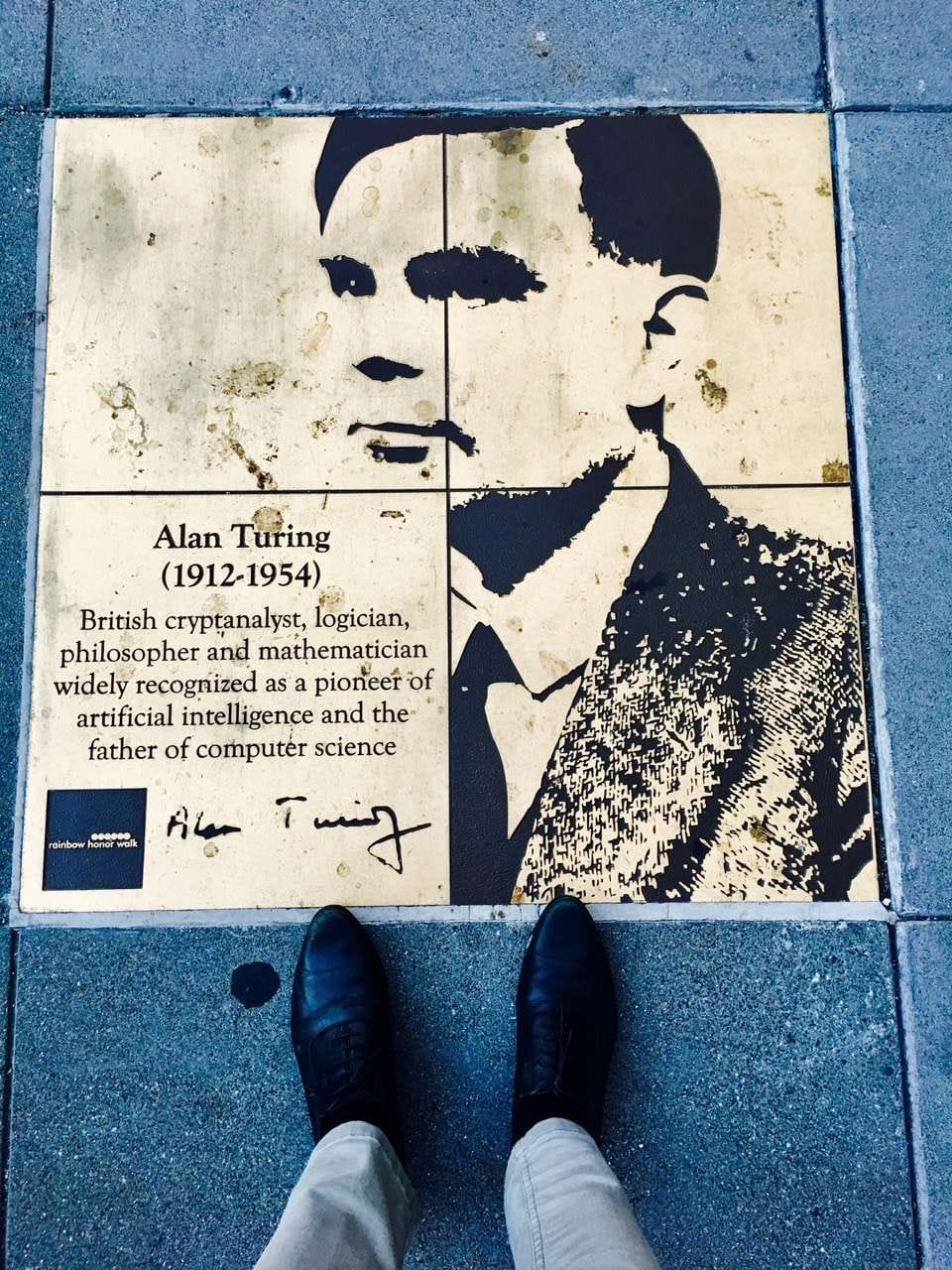

The software engineering profession effectively emerged in the 1950s, amidst the pioneering work of computing luminaries like Turing, Von Neumann, Minsky and McCarthy. With fundamental concepts of modern computer science cemented and "artificial intelligence" coined at the Dartmouth Conference, the first dedicated software developers materialized even absent a formal title. A handful of seminal languages arrived to catalyze programming:

- FORTRAN (1957) - Designed by IBM for scientific computing. The first widely used high-level language, still in use today.

- LISP (1958) - A functional programming language that became a mainstay of AI.

- COBOL (1959) - A language tailored for business data processing, seeing the most extensive real-world application, especially in banking.

- ALGOL 60 (1960) - An early effort to standardize how algorithms are expressed, which profoundly influenced later procedural languages.

A rare elite at the time mastered these arcane tongues for instructing the machine. They were the forebears of software engineers. As we know, invention proceeded apace, from C in the 1970s, C++ in the 1980s and Java in the 1990s to thousands of languages today. Over the past 20 years, somewhere between 4 and 16 new languages have emerged each year.

But languages intrinsically resist natural human expression, existing solely as vehicles to communicate with the machine. Like ancient priests monopolizing access to the "gods" by claiming to speak divine tongues, programming languages concentrate power among the few capable of their formulation and fluency.

The seeds for two castes - the programmers and the programmed - were planted even in those pioneering years. The profession that grew out of that fertile decade now shapes much of the modern world.

A Human Coder can produce no more than 1K tokens per day

A Harvard professor analyzed the economics of highly-compensated Silicon Valley software engineers enjoying average salaries of $220,000 plus over $90,000 in annual benefits per employee.

With an estimated 260 working days per year, this translates to all-in costs of roughly $1,200 per engineer per day ($310,000 / 260 ≈ $1,192). Yet in terms of measurable output, studies estimate the median to be 0 lines of code committed to repositories per day, after accounting for meetings, coordination, failed debugging attempts and so on.

Generously boosting that to 100 daily lines of reviewed, working code at 10 tokens per line still equates to only 1,000 tokens. How much would it cost to generate the same amount of code with GPT-3.5 Turbo 16K? About US$0.004 at the time of this writing, or US$0.12 with GPT-4 32K. Even at the GPT-4 price, that is roughly 1/10,000th of the engineer's daily cost, and the model yields those tokens in seconds to minutes rather than a full working day.
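The arithmetic above can be checked with a short Python sketch. The salary, benefits, working days, lines per day, tokens per line and per-1K-token prices are the figures quoted in the text; the function and constant names are illustrative only, and the prices are a snapshot that will not stay current.

```python
# Figures quoted in the text (not independently verified here).
SALARY_USD = 220_000
BENEFITS_USD = 90_000
WORKING_DAYS = 260

LINES_PER_DAY = 100      # the "generous" estimate from the text
TOKENS_PER_LINE = 10

# Price per 1,000 generated tokens as cited in the text, in US dollars.
PRICE_PER_1K_TOKENS_USD = {
    "gpt-3.5-turbo-16k": 0.004,
    "gpt-4-32k": 0.12,
}


def daily_cost_usd() -> float:
    """All-in cost of one engineer per working day."""
    return (SALARY_USD + BENEFITS_USD) / WORKING_DAYS


def daily_tokens() -> int:
    """Tokens of working code one engineer produces per day."""
    return LINES_PER_DAY * TOKENS_PER_LINE


def model_cost_usd(model: str) -> float:
    """Cost of generating one engineer-day of tokens with the given model."""
    return daily_tokens() / 1000 * PRICE_PER_1K_TOKENS_USD[model]


def cost_ratio(model: str) -> float:
    """How many times more the engineer costs than the model for the same tokens."""
    return daily_cost_usd() / model_cost_usd(model)


if __name__ == "__main__":
    print(f"Engineer: ${daily_cost_usd():,.2f} per day for {daily_tokens()} tokens")
    for name in PRICE_PER_1K_TOKENS_USD:
        print(f"{name}: ${model_cost_usd(name):.3f}, ratio {cost_ratio(name):,.0f}x")
```

Under these inputs the daily cost comes to about $1,192, and the GPT-4 32K ratio lands just under 10,000, which is where the "1/10,000th" figure comes from. The comparison counts generated tokens only; it does not price prompts, retries or review time.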

So despite full mental exertion on the engineers' part, a mid-tier AI model can match their daily production at virtually no economic cost. This may necessitate reassessing the value proposition of traditional software engineering roles given advancements in automation. Misconceptions around assumed human superiority for certain technical work are dissolving through technological achievements once considered implausible.

Human in the loop is the cause of inefficiency

As any business lead or project head knows, getting a software team to produce efficiently is enormously challenging.

Building a few new app screens? Throw 7 or 8 developers in a room and complications multiply: product folks conceptualizing workflows, UI designers crafting prototypes, front-end and back-end coders splitting the work, QA analysts spinning up test harnesses. Prototype-quality output? Three weeks of corner-cutting, quick-and-dirty hacks. Production-ready? Another three months for robust, production-grade code that ensures forward compatibility and flexibility.

Now consider if GPTs displaced those human coders. The team winnows down to just two roles – a product manager conveying specifications and an auditor reviewing machine-generated outputs. The product lead leverages prompting techniques to feed the AI various ideas and confirm finished products. The reviewer spot checks coding efforts, likely dependent on AI tools themselves to keep pace.

So while still retaining human oversight, team bloat and delays shrink by removing hands-on coding as the bottleneck. Two minds directing AI systems can strategize and deliver substantially faster than platoons of developers confined by manual thinking and typing capacities.

Integrating artificial builders exposes the inherent inefficiencies of overloading human coordination. We seem headed toward a future where people focus on high-level goals and constraints while capable software largely handles the intricate construction details.

Agile Iteration? What Agile Iteration?

The whole notion of agile iteration arose to accommodate fallible human software engineers of varying skill levels. Faced with miscommunication among business stakeholders, product managers and development teams, methodologies like Kanban and Scrum try to mitigate uncertainty.

"Where there are people, there are politics." Human collaboration inherently risks derailing software projects through distorted requirements, architectural drift, and other inefficiencies; it is the most failure-prone component. Thus rituals like sprint planning, review demos, and frequent releases attempt to preserve alignment.

But for AI systems, agility equates to compute power. Churning out software revisions becomes a matter of GPU/TPU capacity rather than project management ceremonies. Moreover, artificial developers can iterate tirelessly without rest or distraction - potentially generating and testing hundreds of thousands of code variants a day unimpeded.
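A minimal sketch of what such machine-speed iteration might look like: a generate-and-test loop that keeps proposing candidate implementations until one passes a fixed set of checks. Everything here is hypothetical. `propose_variants` is a hard-coded stand-in for a code-generating model, and the `clamp` task and its test cases are invented for illustration.

```python
from typing import Callable, Iterable, List, Optional, Tuple

# A candidate is an implementation of clamp(value, low, high).
Candidate = Callable[[int, int, int], int]


def propose_variants() -> Iterable[Candidate]:
    """Hypothetical stand-in for a code-generating model.

    A real system would sample fresh candidates from a model; here three
    fixed variants are yielded, of which only the last is correct.
    """
    yield lambda v, lo, hi: v                    # ignores both bounds
    yield lambda v, lo, hi: min(v, hi)           # forgets the lower bound
    yield lambda v, lo, hi: max(lo, min(v, hi))  # correct


# (arguments, expected result) pairs that act as the acceptance check.
TEST_CASES: List[Tuple[Tuple[int, int, int], int]] = [
    ((5, 0, 10), 5),
    ((-3, 0, 10), 0),
    ((42, 0, 10), 10),
]


def passes_all(candidate: Candidate) -> bool:
    """Return True if the candidate matches every expected result."""
    return all(candidate(*args) == expected for args, expected in TEST_CASES)


def first_passing(variants: Iterable[Candidate]) -> Optional[Tuple[int, Candidate]]:
    """Try candidates in order; return (attempt number, candidate) for the first pass."""
    for attempt, candidate in enumerate(variants, start=1):
        if passes_all(candidate):
            return attempt, candidate
    return None


if __name__ == "__main__":
    result = first_passing(propose_variants())
    if result is not None:
        attempt, clamp = result
        print(f"accepted on attempt {attempt}; clamp(42, 0, 10) = {clamp(42, 0, 10)}")
```

The loop itself is cheap; in this framing throughput is bounded by how fast candidates can be generated and checked, and the quality of the result is bounded by how well the test cases capture what is actually wanted.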

So while agile techniques heroically coordinate inconsistent human strengths, orchestrating a platoon of artificial developers would sidestep such constraints entirely. Near-limitless automated variation and validation, unencumbered by biological limitations, suggest software may soon see upgrades at machine speed rather than through rigid, human-paced processes. The very notion of layered process addressing human shortcomings grows obsolete when human hands lift fully off the keyboard.

Architectural Patterns? Refactoring? Irrelevant Now

To tackle complexity in modern software, we've accumulated methods over time - seeking better performance, maintainability, accuracy. As engineers gain domain intimacy, they refactor code to accommodate new realizations and prepare for future needs.

In this pursuit, myriad architectural motifs emerge: MVC, CQRS, Hexagonal, event-driven designs. Coders leverage patterns like Strategy, Proxy and Facade. They adopt processes like DDD, TDD and contract-first design. All aim to manage sprawl through principled decomposition.

Yet if AI generators now compose code, do these etched abstractions still matter? Will machines heed accumulated human knowledge or rather discover new unforeseen frontiers? AlphaGo unearthed computational possibilities dwarfing millennia of human Go insights - perhaps machine-made software will similarly eclipse the patterns we hold so dear.

Or maybe it's all irrelevant now. Instead of a strained grasp of the big picture, granular management minutiae, and political battles over right ways versus wrong, what if an engineer simply prompts "Refactor this 20K line app" and GPT returns pristine order in 5 minutes? The timeless tension between idealism and pragmatism gets overridden by progress that measures merit in minutes rather than months.

So while once serving as ethical compasses for construction, yesterday's principles and processes fade when engineering leaps from manual discipline to automated outcome. The rituals we devised to work around mortal limits hold less relevance working with artificial angels unbound by such constraints.

The Von Neumann Dividend for Coders Ends

To some degree, generations of software engineers have been beneficiaries of the Von Neumann architecture.

John von Neumann (1903-1957), alongside Alan Turing, pioneered modern computing, including foundational concepts such as CPUs, memory, instruction sets and I/O that are still ubiquitous today. His seminal designs informed programming models and languages, operating systems, databases, networks - bedrock elements underlying software engineering. Even recent advances like GPUs and TPUs extend rather than displace the Von Neumann tradition.

Yet how many developers today truly grasp their craft's deep substrate - the mechanical sympathy enabling performant, reliable systems? Abstraction layers now occlude once exposed hardware. Careers concentrate on gluing APIs and business logic in languages nearing natural speech rather than crunching bits via close-to-metal control. The edifice enabling software has faded from conscious concern.

Race car driver Jackie Stewart coined the notion of "mechanical sympathy" - deeply grasping a vehicle's mechanical intricacies to unlock its capabilities. The concept migrated to software thanks to Martin Thompson, underscoring how performance optimization relies on intimately understanding the underlying hardware. It orients developers toward low-level architectural comprehension rather than just abstraction layers. But now, as human instruction gives way to machine learning, such specialized expertise matters less than learning systems tuned to improve themselves through perpetual assessment. Specialist skills fade as adaptable algorithms advance.

Now the advent of large language models dissolves what remains of the facade. Vector databases replace memory. Neural networks supplant CPUs. Agents and functions stand in for I/O and interrupts. Cloud APIs constitute the periphery. A "Natural Language Computer" emerges - still computing by definition, but not requiring engineers fluent in arcane machine languages to operate or augment it.
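To make the analogy concrete, here is a deliberately tiny Python sketch of that mapping. It is a toy under stated assumptions, not a description of any real system: the bag-of-words `embed` function stands in for a learned embedding, `VectorMemory` stands in for a vector database, `toy_model` is a keyword rule standing in for a neural network, and the `TOOLS` table stands in for agent-callable functions. All of these names are invented for illustration.

```python
import math
from collections import Counter
from typing import Callable, Dict, List


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class VectorMemory:
    """Plays the role the text assigns to a vector database ("memory")."""

    def __init__(self) -> None:
        self.items: List[str] = []

    def store(self, text: str) -> None:
        self.items.append(text)

    def recall(self, query: str) -> str:
        """Return the stored text most similar to the query."""
        q = embed(query)
        return max(self.items, key=lambda item: cosine(q, embed(item)))


# "Functions stand in for I/O": callable tools addressed by name.
TOOLS: Dict[str, Callable[[str], str]] = {
    "shout": lambda s: s.upper(),
    "count_words": lambda s: str(len(s.split())),
}


def toy_model(instruction: str) -> str:
    """Stand-in for the neural network ("CPU"): picks a tool by keyword."""
    return "count_words" if "how many" in instruction.lower() else "shout"


def run(instruction: str, memory: VectorMemory) -> str:
    """One 'instruction cycle': recall context, choose a tool, apply it."""
    context = memory.recall(instruction)
    return TOOLS[toy_model(instruction)](context)


if __name__ == "__main__":
    memory = VectorMemory()
    memory.store("the invoice total is 42 dollars")
    memory.store("the meeting starts at noon")
    print(run("shout the meeting time", memory))
    print(run("how many words in the invoice total", memory))
```

The point of the sketch is only the shape of the loop: the operator supplies a sentence, and retrieval, decision and action all happen behind it without the operator touching addresses, registers or instruction sets.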

Just as self-driving promises to displace drivers who rely more on learned skill than mechanical affinity, the democratization of capability diminishes some specialist leverage. Of course exceptional technical talent will remain, much as expert drivers still have motorsports amidst automation. But the von Neumann pillar propping up programming perplexity for the mainstream gives way to intelligent systems converging with human norms. Machine expertise subsumes manual craft.

Will An 80-Year Profession Vanish?

In a sense, artificial intelligence emerged even before modern computers - Turing laying algorithmic foundations in the 30s/40s with von Neumann pioneering architectures in the 40s/50s. A decade-plus gap separated their breakthroughs. Turing theorized computational possibility before von Neumann unlocked practical machine realization.

One might say software engineers carry the poetic burden of ultimately automating themselves out of their privileged gateway between unfathomable complexity and humble human comprehension. The power once monopolized by wizards interpreting arcane tongues gets democratized through technological self-sacrifice.

And with AI now improving at an accelerating pace, seemingly stable careers may rapidly transform. If we playfully bracket the epoch of traditional software engineering between the 1950s dawn of early languages and an open question mark in 2030 - will 80 years be all the profession gets?

Surely the coder stereotype - inarticulate, incurious, fumbling through layers of crusty code and gussied-up gibberish - won't withstand the technological trials ahead. Not when their output gets matched by AI for pennies, customizable through prompted experimentation. But the prospect of handing off tedious tasks unlocks new potential for that original pioneering spirit, exploring the outskirts of human ingenuity.

Software Engineer of the AI Age

The "classical" epoch of software engineering - translating business needs into coded machine instructions - will soon conclude after a good run. We've enjoyed an improbable 80-year boom in tech employment since the computer revolution's early days. Studying computer science, grounded in logic and algorithms, has afforded financial stability for generations.

But as language models advance, many roles focused on textual manipulation will erode. Coders without curiosity into underlying architectures and principles will struggle as artificial intelligence handles rote production and maintenance. Still, those embracing mechanical sympathy and first principles carry technology forward by creating, not just operating.

We owe gratitude for careers solidified through programming's industrial revolution. Yet the field now evolves as machines adopt context and language. Manual translation gives way to high-level conversation. We relinquished physical manufacturing to automation long ago - soon we may cede software's mental assembly.

Of course many niche technical roles will remain, much as elite athletes still exist alongside autonomous transportation. But for the mainstream, progress simplifies rather than complicates. We now welcome an emerging era where technologists coach cognitive systems in desired behaviors rather than intensely focus on step-by-step logistics. The hands-on work gets gradually replaced by minds-on oversight.